Insights from Amazon Science: Smarter Experimentation (Beyond your typical A/B Testing)

How to run experiments when you can't run experiments

This post explores the practical applicability of a fascinating research paper published by Amazon Science in 2024.

Adaptive Experimentation When You Can’t Experiment ✍️ Yao Zhao, Kwang-Sung Jun, Tanner Fiez, Lalit Jain

Amazon has long been at the forefront of research that delivers not just theoretical advances but applied science that solves real-world problems in real time. This paper is a great example of that ethos: it tackles a major challenge businesses face, namely how to learn what works when a controlled experiment is not possible. The problem isn’t just academic; it affects a wide range of industries.

So, how can we apply this research to real-world commercial use cases?

🔍 The Challenge: When A/B Testing Fails in Business

Imagine these industry-specific scenarios where running a traditional A/B test is not straightforward:

- Fintech (Wallet & Payments) → You want to test if higher cash-back incentives improve retention, but you can’t randomly assign different rates without confusing users.

- Retail Banking → You want to test if a higher savings interest rate encourages deposits, but regulations (may) prevent arbitrary interest rate assignments.

- Telecom (Mobile, Landline & Broadband) → You want to see if a mobile + broadband bundle increases retention, but forcing users into different pricing groups could backfire.

In all these cases, direct experimentation might not be feasible due to compliance risks, self-selection bias, or business constraints.

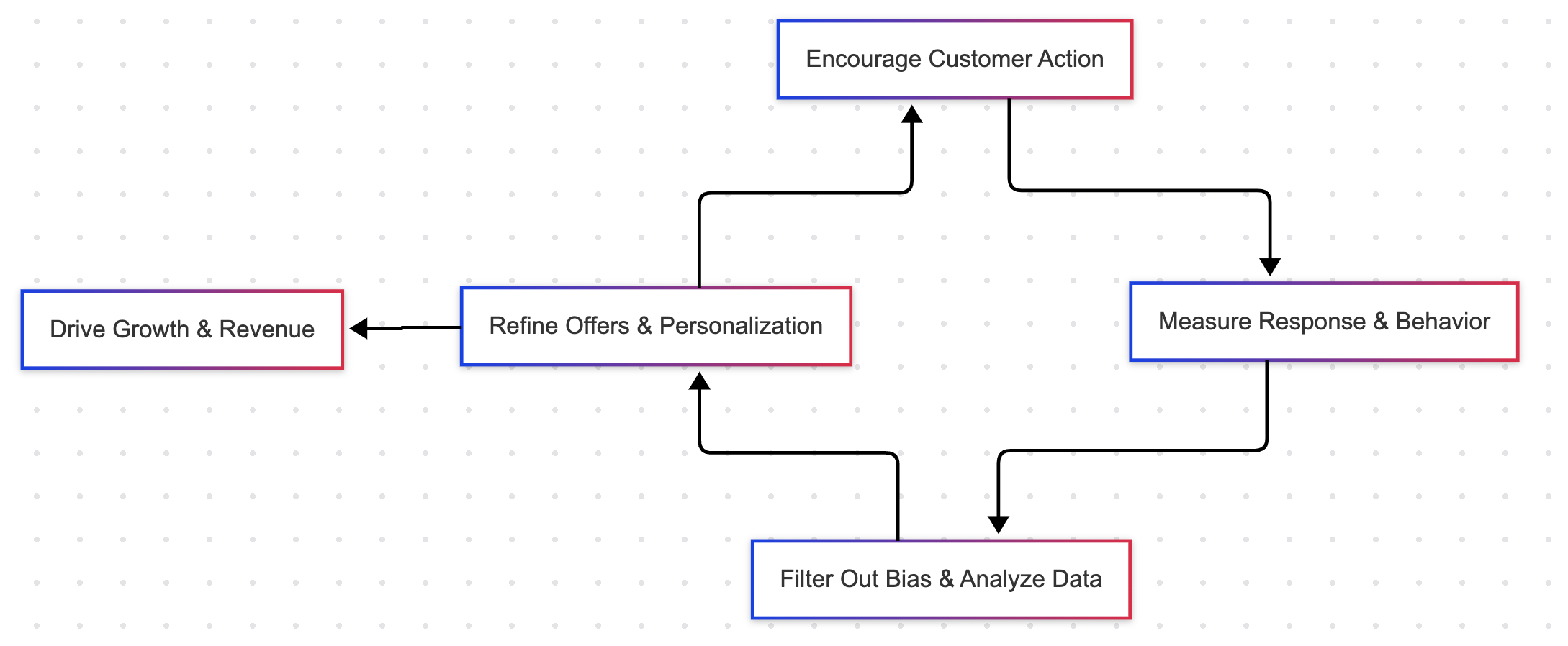

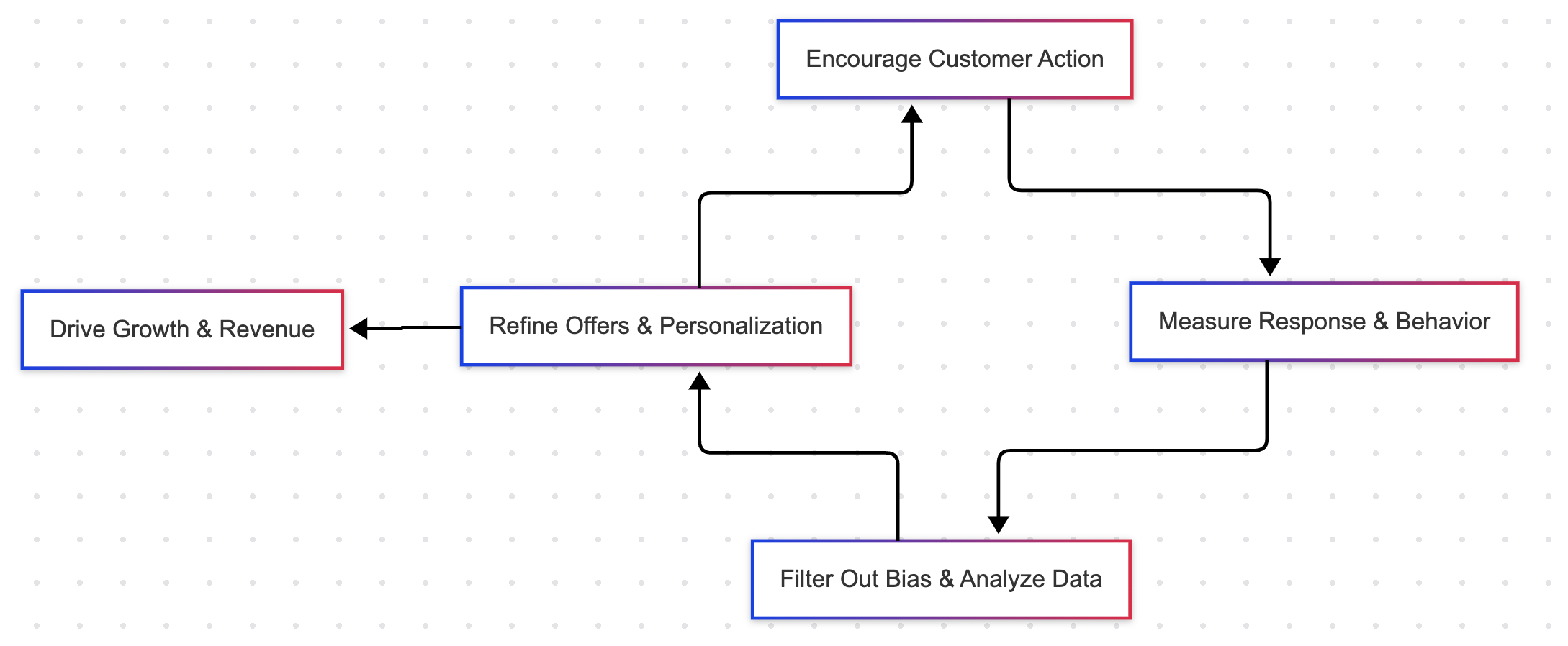

The Smarter Approach: Adaptive Experimentation

Instead of direct A/B testing, companies can use randomized encouragements to nudge user behaviour while still learning what works:

- Fintech → Offer a subset of users a limited-time trial of higher cash-back and track who opts in & how their spending changes.

- Retail Banking → Offer a temporary promotional rate to certain customers and measure if it leads to long-term savings growth.

- Telecom → Give customers a 3-month free trial of a mobile + broadband bundle and analyze if they stick with it afterward.

This method allows businesses to learn customer preferences dynamically while avoiding the downsides of forced experimentation.
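
To make the idea of a randomized encouragement concrete, here is a minimal sketch of how an offer could be assigned at random and how opt-in could then be tracked. Everything named here is an assumption for illustration and does not come from the paper: the function names `is_encouraged` and `opt_in_rates`, the experiment label, and the 50% share. Hashing the user ID is just one common way to get a stable, reproducible random split.

```python
import hashlib


def is_encouraged(user_id: str, experiment: str, share: float = 0.5) -> bool:
    """Deterministically place a user in the encouraged group.

    Hypothetical helper: hashes the experiment label and user ID into a
    number in [0, 1) and encourages the user if it falls below `share`.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000  # first 32 bits scaled to [0, 1)
    return bucket < share


def opt_in_rates(records):
    """Compute the opt-in rate for encouraged and non-encouraged users.

    `records` is an iterable of (encouraged, opted_in) boolean pairs.
    """
    counts = {True: [0, 0], False: [0, 0]}  # group -> [opt-ins, total users]
    for encouraged, opted_in in records:
        counts[encouraged][0] += int(opted_in)
        counts[encouraged][1] += 1
    return {
        "encouraged": counts[True][0] / counts[True][1],
        "not_encouraged": counts[False][0] / counts[False][1],
    }


if __name__ == "__main__":
    # Illustrative user IDs and experiment label only.
    for uid in ["user-1", "user-2", "user-3"]:
        print(uid, is_encouraged(uid, "cashback-trial"))
```

The key point is that the offer is randomized while the decision to take it up stays with the customer. The gap between the two opt-in rates shows how strongly the encouragement actually moves behaviour.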

🔬 The Science Behind It: Filtering Out Bias

What makes this different from simple promotions? It’s built on instrumental variable (IV) analysis, a technique from econometrics that removes selection bias:

- Customers self-select into offers, but we filter out natural bias (e.g., high spenders already opting for better incentives).

- You apply an adaptive learning algorithm to dynamically adjust pricing, incentives, and bundling based on real behavior.

- Instead of costly trial-and-error, businesses optimize in real time while staying compliant & fair.
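
The bias-filtering step can be illustrated with the simplest IV estimator, the Wald estimator: divide the effect of the encouragement on the outcome by the effect of the encouragement on opt-in. The sketch below runs it on simulated data. It is not the adaptive algorithm from the paper, and every number in it is an assumption chosen for illustration: a hidden "high spender" trait, the opt-in probabilities, and a true effect of 2.0.

```python
import random


def simulate(n=50_000, seed=0, true_effect=2.0):
    """Simulate users as (encouraged, opted_in, outcome) rows.

    A hidden 'high spender' trait raises both the chance of opting in and
    the outcome, which is what biases a naive comparison.
    """
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        high_spender = rng.random() < 0.5   # hidden confounder
        encouraged = rng.random() < 0.5     # randomized encouragement
        p_opt_in = 0.1 + 0.4 * encouraged + 0.3 * high_spender
        opted_in = rng.random() < p_opt_in  # the customer self-selects
        outcome = true_effect * opted_in + 3.0 * high_spender + rng.gauss(0.0, 1.0)
        rows.append((encouraged, opted_in, outcome))
    return rows


def mean(values):
    values = list(values)
    return sum(values) / len(values)


def naive_estimate(rows):
    """Compare opt-ins with non-opt-ins directly (affected by self-selection)."""
    return mean(y for _, d, y in rows if d) - mean(y for _, d, y in rows if not d)


def wald_estimate(rows):
    """Wald IV estimate: outcome gap by encouragement / opt-in gap by encouragement."""
    outcome_gap = mean(y for z, _, y in rows if z) - mean(y for z, _, y in rows if not z)
    opt_in_gap = mean(d for z, d, _ in rows if z) - mean(d for z, d, _ in rows if not z)
    return outcome_gap / opt_in_gap


if __name__ == "__main__":
    rows = simulate()
    # The simulated true effect is 2.0; the naive comparison is expected to overshoot it.
    print("naive:", round(naive_estimate(rows), 2))
    print("IV (Wald):", round(wald_estimate(rows), 2))
```

In this simulation the naive comparison mixes the effect of the offer with the fact that high spenders opt in more often, while the Wald estimate uses only the variation created by the random encouragement. It relies on the encouragement affecting the outcome only through opt-in, which is an assumption worth checking in any real use case.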

📈 Why This Matters?

This approach enables:

- Faster, more cost-effective optimization (no wasted A/B test groups).

- Better decision-making with minimal bias (true cause-and-effect insights).

- Fair & transparent offers (staying within business and regulatory constraints).

The telecom, banking, and fintech examples all share the same fundamental challenge: A/B testing isn’t always an option, but smarter adaptive experimentation is.

BibTeX Citation:

@Article{Zhao2024,
  author = {Yao Zhao and Kwang-Sung Jun and Tanner Fiez and Lalit Jain},
  title = {Adaptive experimentation when you can’t experiment},
  year = {2024},
  url = {https://www.amazon.science/publications/adaptive-experimentation-when-you-cant-experiment},
}

⚠️ Disclaimer

The views expressed here are my own and do not represent the opinions of my past / current / future employer(s).

/ Unni